The GenAI Governance Checklist – Best Practices for Starting with GenAI

By Teqfocus Team

19th Jun, 2024


The rise of Generative AI (GenAI) is reshaping industries and redefining what’s possible. From crafting compelling marketing campaigns to accelerating drug discovery, its potential is undeniable. However, this transformative power comes with a responsibility to use it ethically, fairly, and responsibly. A robust governance framework is paramount to navigating the complexities and unlocking the full potential of GenAI.

85% of CEOs believe that AI will significantly change the way their company does business within the next five years, making ethical considerations paramount.

Why GenAI Governance Matters

The stakes are high. GenAI models, while incredibly powerful, can also generate misleading content, perpetuate biases, and even infringe on privacy. A 2023 study by McKinsey found that companies with strong AI governance are 3x more likely to achieve their AI objectives. Furthermore, robust governance builds trust with customers, partners, and regulators, paving the way for long-term success.

Why GenAI Governance is Non-Negotiable

GenAI presents unique challenges that call for a well-defined governance structure:

- Inherent Bias – GenAI models are trained on massive datasets, often reflecting societal biases. Unchecked, this can lead to discriminatory or unfair outputs, potentially causing harm and reputational damage.

- Misinformation and Deepfakes – The ease of generating realistic text, images, and videos raises concerns about the spread of misinformation and the creation of convincing deepfakes. These can erode trust, manipulate public opinion, and even incite violence.

- Intellectual Property (IP) Concerns – GenAI can produce content that closely resembles existing works, blurring the lines of copyright and ownership. Without clear guidelines, this can lead to legal disputes and stifle creativity.

- Privacy and Security Risks – GenAI models often require access to sensitive data, including personal information and proprietary business data. Mismanagement or breaches can have severe consequences, both legally and reputationally.

- Lack of Transparency and Explainability – Many GenAI models are complex, making it difficult to understand how they arrive at decisions. This lack of transparency can lead to mistrust and hinder adoption, especially in high-stakes applications.

A well-structured governance framework addresses these challenges head-on, ensuring that GenAI is harnessed for good while mitigating potential risks.

Best Practices for Building a Robust GenAI Governance Framework

1. Define a Clear Vision and Strategic Objectives – Begin by aligning your GenAI initiatives with your broader organizational goals. Are you aiming to enhance customer experiences, optimize operations, or drive innovation? Clearly articulating your objectives provides a compass for your governance strategy.

2. Establish a Cross-Functional Governance Team – GenAI touches multiple facets of your organization, from legal and compliance to technology and marketing. Form a diverse team representing various disciplines to collaboratively develop and implement your governance policies. This ensures that all perspectives are considered, fostering a holistic approach.

3. Develop a Comprehensive Policy Framework – Your governance framework should encompass a wide range of policies addressing key aspects of GenAI usage:

- Data Governance – Clearly define how data will be collected, stored, and used, ensuring compliance with all relevant privacy regulations like GDPR or CCPA. Implement robust data anonymization and de-identification techniques to protect sensitive information.

- Model Development and Deployment – Establish rigorous standards for training, testing, and deploying GenAI models. This includes measures to detect and mitigate bias, as well as regular audits to monitor model performance and identify potential issues.

- Content Generation and Usage – Craft guidelines for the ethical and responsible use of GenAI-generated content. Address concerns like plagiarism, misinformation, and deepfakes. Consider implementing watermarking or other techniques to identify AI-generated content.

- Transparency and Explainability – Prioritize transparency by documenting the decision-making processes of your GenAI models. Utilize explainable AI (XAI) techniques to provide insights into how models arrive at conclusions. This fosters trust and enables users to understand the reasoning behind AI-generated outputs.

- Human-in-the-Loop (HITL) – Design workflows that incorporate human oversight and intervention where appropriate. This is particularly important for high-risk applications where errors or biases could have significant consequences.

4. Prioritize Data Quality and Bias Mitigation – The quality of your training data directly impacts the performance and fairness of your GenAI models. Invest in high-quality, diverse, and representative datasets. Actively implement bias mitigation techniques, such as data augmentation, debiasing algorithms, and fairness-aware training procedures.

5. Implement Robust Monitoring, Auditing, and Accountability – Regularly monitor the outputs of your GenAI models to detect and address biases, errors, or misuse. Establish a clear accountability framework, defining roles and responsibilities for ensuring compliance with your governance policies. Conduct periodic audits to assess the effectiveness of your governance measures and identify areas for improvement.

6. Foster a Culture of Education and Training – Equip your workforce with the knowledge and skills needed to work effectively with GenAI. Provide comprehensive training on the capabilities, limitations, and ethical considerations of GenAI technologies. Encourage continuous learning and development to stay abreast of the rapidly evolving landscape.

7. Promote Transparency and Open Communication – Be transparent about your use of GenAI, both internally and externally. Clearly communicate your governance policies, the safeguards you have in place, and the steps you’re taking to ensure ethical and responsible AI use. Engage in open dialogue with stakeholders, including employees, customers, and the public, to build trust and address concerns.

8. Stay Abreast of Evolving Regulations – The legal and regulatory landscape surrounding GenAI is in flux, with new laws and guidelines emerging regularly. Stay informed about these developments and ensure that your governance policies remain compliant. Engage with policymakers and industry groups to shape the future of AI governance.
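
The Human-in-the-Loop policy described under practice 3 can be made concrete with a simple routing rule. The following is a minimal sketch, not a prescribed implementation: the `Risk` levels, the `Draft` type, and the `route` function are all hypothetical names introduced for illustration, and the choice of `MEDIUM` as the review threshold is an assumption your governance team would need to set.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    """Hypothetical risk tiers assigned to each GenAI use case."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Draft:
    use_case: str
    text: str
    risk: Risk


def route(draft: Draft, review_threshold: Risk = Risk.MEDIUM) -> str:
    """Send a draft to human review when its risk meets the threshold."""
    if draft.risk.value >= review_threshold.value:
        return "human_review"
    return "auto_release"


drafts = [
    Draft("internal brainstorm", "Ten tagline ideas...", Risk.LOW),
    Draft("loan decision letter", "We regret to inform...", Risk.HIGH),
]

# Map each use case to the queue its output would land in.
decisions = {d.use_case: route(d) for d in drafts}
print(decisions)
```

Keeping the threshold as a parameter means the policy can be tightened without touching the workflow code.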

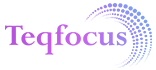

GenAI Governance Framework

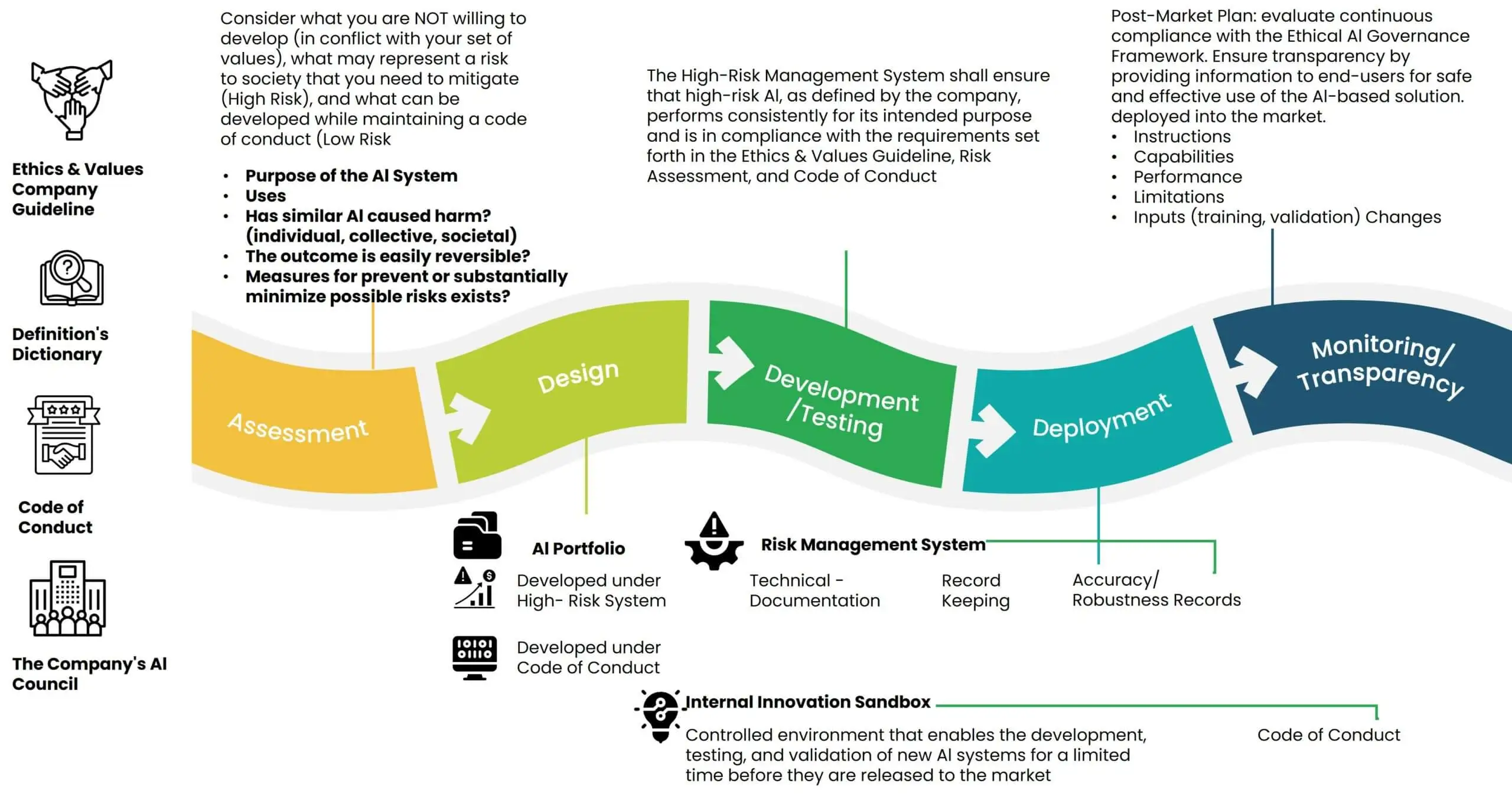

Key Pillars of Effective GenAI Governance

1. Data Privacy and Security – The Foundation of Trust

- Robust data protection – Implement encryption, access controls, and anonymization techniques to safeguard sensitive information.

- Regulatory compliance – Ensure adherence to data protection regulations such as GDPR, HIPAA, and CCPA.

- Data minimization – Collect only the data necessary for AI model training and operation.

- Regular security audits – Conduct periodic assessments to identify and address vulnerabilities.

Example – A healthcare organization using GenAI for patient diagnosis must strictly adhere to HIPAA regulations to protect patient health information (PHI). This includes obtaining patient authorization where HIPAA requires it, storing PHI securely, and enforcing strict access controls.
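
As a rough illustration of data minimization combined with pseudonymization, the sketch below keeps only an allow-list of fields and replaces the identifier with a keyed hash. The field names, the `ALLOWED_FIELDS` set, and the demo key are assumptions made for this example. Note that keyed hashing is pseudonymization rather than full anonymization, and on its own it would not satisfy a formal de-identification standard.

```python
import hashlib
import hmac

# Hypothetical allow-list: only these fields may reach the model pipeline.
ALLOWED_FIELDS = {"patient_id", "age_band", "symptoms"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Drop fields outside the allow-list and pseudonymize the identifier."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "patient_id" in kept:
        kept["patient_id"] = pseudonymize(str(kept["patient_id"]), key)
    return kept


record = {
    "patient_id": "P-1001",
    "name": "Jane Doe",
    "age_band": "40-49",
    "symptoms": "persistent cough",
    "address": "12 Example Street",
}

# In practice the key would come from a secrets manager, not source code.
key = b"demo-key-not-for-production"
safe = minimize(record, key)
print(sorted(safe))
```

Because the hash is keyed and deterministic, the same patient maps to the same token across records, which preserves joins without exposing the raw identifier.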

2. Ethical AI Use – Prioritizing Fairness and Transparency

- Ethical guidelines – Develop clear policies and principles for ethical AI use, focusing on fairness, transparency, and accountability.

- Bias mitigation – Regularly assess AI models for bias and take corrective action to ensure fairness.

- Explainable AI (XAI) – Use techniques like LIME and SHAP to explain AI decision-making processes to stakeholders.

- Human oversight – Implement mechanisms for human review and intervention in critical AI decisions.

Example – A financial institution using GenAI for loan approvals must ensure the algorithm doesn’t discriminate based on race, gender, or other protected characteristics. Regular audits and bias mitigation techniques are crucial.
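
One simple way to start a bias audit for the loan example is to compare approval rates across groups. The sketch below computes a disparate impact ratio (lowest group rate divided by highest). The data is made up, the function names are hypothetical, and the 0.8 cut-off is borrowed from the "four-fifths" rule of thumb as an assumption, not as a legal standard for lending. A real audit would use several fairness metrics and legal review.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group approval rate."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody approved in any group, so no disparity in rates
    return min(rates.values()) / highest


# Made-up audit sample: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # assumed review threshold
print(rates, round(ratio, 3), flagged)
```

A flagged ratio is a prompt for investigation rather than proof of discrimination, since group differences can have several causes.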

3. Model Validation and Monitoring – Maintaining Accuracy and Relevance

- Continuous validation – Regularly test and validate AI models to ensure accuracy and relevance in changing environments.

- Performance monitoring – Track model performance over time and identify potential issues or degradation.

- Model updates – Update models as needed to incorporate new data or address performance issues.

- Version control – Maintain version history of models to enable traceability and rollback in case of errors.

Example – A marketing team using GenAI for content generation should continuously validate the model’s output to ensure it aligns with brand voice and messaging guidelines.
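
Performance monitoring can be sketched as a rolling average compared against a baseline. In the example below, the quality scores stand in for whatever metric your team uses (for instance, reviewer ratings of brand-voice fit). The `QualityMonitor` class, the baseline of 0.90, and the tolerance of 0.05 are illustrative assumptions.

```python
from collections import deque
from statistics import mean


class QualityMonitor:
    """Flag degradation when the rolling mean drops below baseline - tolerance."""

    def __init__(self, baseline: float, window: int = 5, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.scores = deque(maxlen=window)  # keeps only the latest `window` scores

    def record(self, score: float) -> bool:
        """Add a score and return True if the full window shows degradation."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and mean(self.scores) < self.baseline - self.tolerance


monitor = QualityMonitor(baseline=0.90, window=3, tolerance=0.05)
alerts = [monitor.record(s) for s in [0.91, 0.89, 0.90, 0.80, 0.78]]
print(alerts)
```

Waiting for a full window before alerting avoids reacting to a single bad output, at the cost of a short delay in detection.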

4. Documentation and Traceability – Building Accountability

- Comprehensive documentation – Document all aspects of AI model development, data sources, and decision-making processes.

- Data lineage – Track the origin and transformation of data used for AI model training.

- Audit trails – Maintain records of AI model decisions and actions to enable traceability and accountability.

Example – A software development team using GenAI for code generation must document the model’s training data and decision-making logic to ensure code quality and security.
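
An audit trail is more useful when tampering is detectable. One common approach, sketched below under simplifying assumptions, is to chain entries by hash so that changing any past record invalidates everything after it. The event fields (`model`, `action`, `reviewer`) and the function names are hypothetical. A production system would also need durable, access-controlled storage.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event, linking it to the previous entry by hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev_hash": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


log = []
append_entry(log, {"model": "codegen-v1", "action": "generate", "reviewer": "alice"})
append_entry(log, {"model": "codegen-v1", "action": "approve", "reviewer": "bob"})
intact = verify(log)

# Simulate someone editing a past record after the fact.
log[0]["event"]["reviewer"] = "mallory"
tampered_detected = not verify(log)
print(intact, tampered_detected)
```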

5. Stakeholder Engagement – Fostering Collaboration and Trust

- Involve stakeholders early – Engage with stakeholders from diverse backgrounds to understand their perspectives and concerns.

- Open communication – Clearly communicate AI governance policies, practices, and potential impacts.

- Feedback mechanisms – Establish channels for stakeholders to provide feedback and suggestions.

- Education and training – Provide training to employees and stakeholders to enhance understanding and trust in AI.

Example – An e-commerce company using GenAI for personalized product recommendations should engage with customers to understand their preferences and address privacy concerns.

6. Compliance and Auditing – Ensuring Adherence to Standards

- Regular audits – Conduct periodic audits to assess compliance with internal policies, external regulations, and ethical standards.

- Independent review – Engage independent auditors to provide unbiased assessments of AI governance practices.

- Continuous improvement – Use audit findings to identify areas for improvement and implement corrective actions.

Example – A government agency using GenAI for decision-making must conduct regular audits to ensure compliance with legal and ethical standards.

7. Risk Management – Proactive Mitigation Strategies

- Risk assessment – Identify potential risks associated with AI deployment, such as bias, discrimination, errors, and security breaches.

- Mitigation strategies – Develop proactive strategies to mitigate identified risks, such as bias mitigation techniques, human oversight, and robust security measures.

- Contingency plans – Prepare for potential AI failures or malfunctions, including rollback mechanisms and alternative decision-making processes.

Example – A manufacturing company using GenAI for predictive maintenance should have contingency plans in place to address potential equipment failures or malfunctions caused by inaccurate AI predictions.
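
A contingency plan often comes down to a fallback path that takes over when the model fails or is unsure. The sketch below wraps a prediction call so that errors and low-confidence results are handed to a rule-based alternative. Both `hypothetical_model` and `rule_based_fallback` are stand-ins invented for this example, and the 0.7 confidence floor is an assumed value.

```python
def predict_with_fallback(model, fallback, reading, min_confidence=0.7):
    """Use the model's answer only when it succeeds and is confident enough."""
    try:
        label, confidence = model(reading)
    except Exception:
        # Model failure: switch to the alternative decision process.
        return fallback(reading), "fallback:error"
    if confidence < min_confidence:
        return fallback(reading), "fallback:low_confidence"
    return label, "model"


def hypothetical_model(reading: float):
    """Stand-in for a predictive maintenance model returning (label, confidence)."""
    if reading < 0:
        raise ValueError("sensor fault")
    return ("service_soon", 0.9) if reading > 80 else ("ok", 0.5)


def rule_based_fallback(reading: float) -> str:
    """Conservative default used when the model cannot be trusted."""
    return "inspect_manually"


results = [
    predict_with_fallback(hypothetical_model, rule_based_fallback, r)
    for r in (95.0, 40.0, -1.0)
]
print(results)
```

Returning the decision source alongside the label makes it easy to log how often the fallback is used, which is itself a useful risk indicator.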

8. Data Governance – Ensuring Quality and Consistency

- Data governance framework – Establish a framework to manage data quality, lineage, and ownership.

- Data quality assurance – Implement processes to ensure data used for AI is accurate, consistent, and complete.

- Data access controls – Define clear policies for data access and usage within the organization.

- Data lifecycle management – Manage the entire lifecycle of data, from collection to storage and disposal.

Example – A research institution using GenAI for data analysis must maintain data quality and consistency so that its research findings are reliable.
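
Data quality assurance can begin with basic automated checks for completeness and duplication. The following is a minimal sketch with made-up rows and hypothetical field names. Real pipelines would add type, range, and consistency checks suited to the dataset.

```python
def quality_report(rows, required):
    """Count missing required values and duplicate rows."""
    missing = {
        field: sum(1 for row in rows if row.get(field) in (None, ""))
        for field in required
    }
    seen = set()
    duplicates = 0
    for row in rows:
        # Two rows count as duplicates when all required fields match.
        key = tuple(row.get(field) for field in required)
        if key in seen:
            duplicates += 1
        seen.add(key)
    return {"rows": len(rows), "missing": missing, "duplicates": duplicates}


rows = [
    {"sample_id": "S1", "value": 1.2},
    {"sample_id": "S2", "value": None},
    {"sample_id": "S1", "value": 1.2},
]

report = quality_report(rows, ["sample_id", "value"])
print(report)
```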

Real-World Examples

Leading companies are already implementing robust GenAI governance practices:

- Salesforce – They’ve developed a comprehensive AI Ethics framework that prioritizes fairness, accountability, and transparency.

- AWS – Their Responsible AI initiative focuses on ensuring fairness, explainability, and privacy in their AI services.

- Snowflake – Their Data Cloud Governance framework provides a robust foundation for managing and securing data used in GenAI applications.

According to a McKinsey survey, 63% of executives believe that AI will significantly impact their industry in the next five years. To reap the benefits of GenAI while mitigating the risks, robust governance is a must.

The Future of GenAI Governance

Generative AI offers immense potential, but responsible governance is paramount. By prioritizing data privacy, ethical use, model validation, documentation, stakeholder engagement, compliance, risk management, and data governance, you can harness the power of GenAI while mitigating risks and ensuring long-term success.

GenAI is still in its early stages, and governance practices will continue to evolve. New technologies and applications will emerge, raising new ethical and legal questions. Remember, GenAI governance is not a one-time activity but an ongoing process. By embedding these practices into your organization’s culture, you can create a foundation for ethical, responsible, and trustworthy AI use.